Why Functional Programming and Big Data go hand-in-hand

Big data workflows involve transforming large amounts of information, often unstructured, into data science driven insights, or highly available data stores used by applications. In order to create a big data store – we often have to define several tasks required to accomplish this transformation.

Initially, many of us might envision such transformations as a flow of states. This sort of thinking, while simplistic, is dangerous. The reason is because (1) state is hard to maintain (2) understanding state requires defining and persisting transformations along side the state itself and (3) when dealing with large data sets, reprocessing is required if algorithms need to change – and that reprocessing is both costly from a computational perspective, as well as in terms of raw time wasted waiting for completion of a batch workflow.

For example, lets say we want to take a list of conversations, and extract human entites (names) from them and output a list of human entites who appear to be linked to one another (i.e. joe and mary are linked if they have had a recent conversation). If we broke this into a linear flow of tasks, it might take more than a day to process all this information if we have, say, a pedabyte of raw text sitting on a computation cluster of 16 standard machines.

We can define these transformations imperatively, using a low-level framework like Hadoop’s MapReduce. Alternatively, we can define it using flows (i.e. using a framework such as Cascading/Cascalog, which is based off of the declarative programming paradigm).

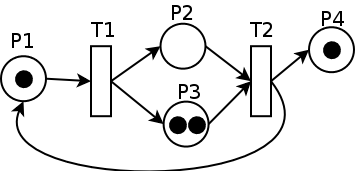

The Imperative Approach : Finite State Machines

Lets consider the above “finite state machine” approach (in the real world, this might correspond to a MapReduce application with several jobs). The simplest way to design a workflow for such extraction might involve creating a two step workflow. We start with a large set of text documents. The first step would “clean” conversations, outputting plain text words. The second step would involve linking and deduplicated the parties involved by using joins, for example, via MapReduce.

The advantages of this – all data is entirely processed when the flow is completed, and it is extremely performant in the best case scenario, because we can stream through large documents very efficiently in a batch workflow, due to the minimization of latency of starting and stopping. Its “easy” to explain to someone – there is “step 1”, “step 2”, and so on. However, the disadvantage is that it is difficult to redirect and modify.

The disadvantages of this approach is that its, essentially, all-or-nothing. If something fails during the state transformations, because we are processing all data in batch, we will potentially have to recompile our source code, and restart the workflow from scratch.

The alternative: Rather than envisioning this problem as a two step transformation, we could envision it as a flow of data transformations, or as a single, infintely running work queue.

Once we get away from the imperative, state-based view of the problem, we find that the conceptual roots for any solution will be much more well-aligned with declarative, functional programming idioms, rather than imperative ones. Languages such as Erlang and Lisp give us the basis for dealing with long running, evolving systems.

For example, in a functionally inspired solution to this problem we might design the following architecture:

- Documents are continuously preprocessed (unimportant words and characters are removed) and put into a key value store. The key here is the document.

- Documents are marked as processed or unprocessed. This can be done, also, using a key value store.

- Entity relations are extracted from documents and placed into a graph database in a separate process.

- A thread continually listens to document transformation events. When a document is transformed, this thread adds its key to the top of a work queue.

- When the algorithm for entity extraction is updated, the graph database can be deleted, and all documents can be lazily marked as unprocessed.

Examples of transformative, rather than iterative big-data workflows :

- For an even more sophisticated perspective on real-time, dynamic processing of large data sets, watch Nathan Marz’s talk on storm https://www.youtube.com/watch?v=cF8a_FZwULI. Storm allows for iterative, fault-tolerant processing of streams with real-time groupings and feed splitting, providing an elegant and highly scalable (in terms of computation, as well in terms of throughput).

- Another alternative to imperatively defined data processes is elegantly implemented in berkeley’s “Spark” platform. The spark platform implements fault tolerance by virtue of the fact that lost nodes can be reclaimed by re-application of the functional definitions originally used to define data transformations (see http://vimeo.com/20757432).

The advantage of the latter approach is that as soon as our system starts, it begins producing data – and if we decide to improve our algorithms while processing, that is very easy to do – because at any given time we can choose to begin reprocessing documents on our work queue (of course, in order to implement this effectively we would need to have logic for avoiding addition of duplicate data to the system). There are a few obvious disadvantages including best-case performance drop offs. Overall, however, this sort of message driven architecture proves to be extremely valuable in many industrial-strength big-data applications (for example, chat rooms, high volume websites with a need for asynchronous workflows, bank and financial systems, etc…).

This highly decoupled architecture allows for continuous improvement without downtime.

Technically? In a sophisticated, highly dynamic and concurrent scenario, a language such as Erlang becomes extremely relevant. But why? Because a complex, concurrent system with high levels of uptime needs to be fault tolerant and robust (meaning that individual failures don’t cause the entire system to go down). How can we make a system robust? By making it dynamic. Adding a dynamic element to a concurrent work queue allows us to continuously improve and fine tune the algorithms we are using to extract entities without causing downtime. It also gaurantees that, at any given moment, data is continually being processed – so we don’t waste our large cluster resources by forcing them to sit idle while rebuilding or retooling libraries.

Why is this sort of problem solving less common in imperative langauges?

We’ve all heard of these new-fangled startups (flightcaster, mixpanel, etc…) who have chosen to use functional languages to solve scalability problems. And we inevitably ask ourselves…

Can’t you just implement that fancy stateless architecture in Java ? Aren’t all programs simply state-machines which can be reduced to the same exact computational model? Why do we need a new language?

The reason why functional languages encourage scalable software architectures is because of the fact that they, from the bottom up, eliminate as much shared state as possible from a language. This leads to scalable “micro” components. Those micro components then scale naturally into larger components.

The idea that micro-scalable application is macro-scalable is not hot-air. Scalability is a bottom-up phenomenon. The largest systems in the world evolved this way, including Life.

Life is designed not from the top-down, but rather from the bottom up. Each cell is autonomous. All cellular systems are coordinated by message passing, as opposed to hierarchically. When cells don’t communicate effectively, or stop listening to each other, – we get cancer (for example, the kinase and signalling profiles of tumor cells are highly perturbed, where as the internal physiology is conserved).

Back to programming langauges: What does bottom up scalability give you?

Fault tolerance means that a system can continue working even through failures. There are two ways to do this: predict failures and prescribe the desired error handling, or simply build “ready-to-fail” logic into an application from the beginning. In order to allow for failure, you have to allow an application to continually restart itself and, importantly, to continue to learn and pick up incremental improvements in source code. This is exceedingly complex in any pre-compiled / non functional language.

- Robust? You have to manage memory and state manually. This is great for micro-optimization. But when building large concurrent systems, micro optimizations only provide you with a small constant time speed up, which is offset drastically by the scalability benefits gleaned from transparently scalable, concurrent algorithms. Furthermore : an application with explicit memory management and complex state will require more “steps” and increasingly complicated failure handling (stateless operations have gauranteed atomicity properties which are inherent in the language itself).

- Dynamic? You have to write your own framework for converting messages into operations (that is, for example, you can’t directly send java classes around as executables at runtime). Languages like clojure and erlang support the passing around of dynamic functionality from the ground up: You can inject hotfixes in a running system much more naturally than you would in a structured language.