Hacking with devstack in a VM

When I first started working on OpenStack, I spent too much time trying to find a good development environment. I even started a series of blog posts (which I never finished) about how I rolled my own development environments. I had messed around with devstack a bit, but I had a hard time configuring and using it in a way that was comfortable for me. I have since rectified that problem, and I now have a methodology for using devstack that works very well and saves me a lot of time. I describe it in detail in this post.

Run devstack in a VM

You may be tempted to run devstack locally (easier access to local files, your IDE, a debugger, etc.). Resist this temptation: put devstack in a VM.

Here are the steps I recommend for your devstack VM:

- Create a Fedora 18 qcow2 base VM

- Create a child VM from that base

- Run devstack in the child VM

In this way you always have a fresh, untouched Fedora 18 VM (the base image) around for creating new child images, which can serve as additional disposable devstack VMs or anything else you need.

Create the base VM

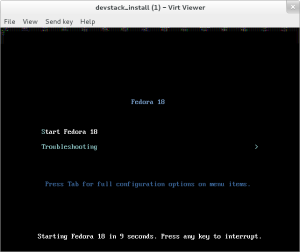

The following commands will create a Fedora 18 base VM.

qemu-img create -f qcow2 -o preallocation=metadata devstack_base.qcow2 12G
wget http://fedora.mirrors.pair.com/linux/releases/18/Live/x86_64/Fedora-18-x86_64-Live-Desktop.iso
virt-install --connect qemu:///system -n devstack_install -r 1024 --disk path=`pwd`/devstack_base.qcow2 -c `pwd`/Fedora-18-x86_64-Live-Desktop.iso --vnc --accelerate --hvm

At this point a window will open that looks like this:

Simply follow the installation wizard just as you would when installing Fedora on bare metal. When partitioning the disk, make sure that the swap space is no bigger than 1GB. Once the installation is complete, run the following commands on the host:

sudo virsh -c qemu:///system undefine devstack_install
sudo chown <username>:<username> devstack_base.qcow2
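If you expect to rebuild the base image, the commands above can be kept together as two small shell functions, one for before the installer runs and one for after. This is only a sketch: the function names `create_base_vm` and `finish_base_install` are made up, it assumes `qemu-img`, `virt-install` and `virsh` are installed on the host and the ISO is already downloaded, and it uses `id -un`/`id -gn` in place of the `<username>` placeholder.

```shell
#!/usr/bin/env bash
# Sketch: the base-image steps as two hypothetical helper functions.
set -euo pipefail

# Create the qcow2 disk and boot the Fedora installer from the ISO.
#   $1 = image path, $2 = disk size (e.g. 12G), $3 = installer ISO path
# Pass absolute paths, as the original commands do with `pwd`.
create_base_vm() {
    local image=$1 size=$2 iso=$3
    # preallocation=metadata writes only the qcow2 metadata up front;
    # the file still grows as the guest writes data.
    qemu-img create -f qcow2 -o preallocation=metadata "$image" "$size"
    virt-install --connect qemu:///system -n devstack_install -r 1024 \
        --disk path="$image" -c "$iso" --vnc --accelerate --hvm
}

# Run after the installer finishes: drop the temporary libvirt domain
# (the disk image itself is kept) and hand the image to the current user.
#   $1 = image path
finish_base_install() {
    local image=$1
    sudo virsh -c qemu:///system undefine devstack_install
    sudo chown "$(id -un):$(id -gn)" "$image"
}
```

For example, `create_base_vm "$PWD/devstack_base.qcow2" 12G "$PWD/Fedora-18-x86_64-Live-Desktop.iso"`, then, once the installer is done, `finish_base_install "$PWD/devstack_base.qcow2"`.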

Import into virt-manager

From here on I recommend using the GUI program virt-manager. It is certainly possible to do everything from the command line, but virt-manager makes it a lot easier.

Find the Create a new virtual machine button (shown below) and click it:

This opens a wizard that walks you through the process. In the first dialog, select Import existing disk image (as shown below) and point it at devstack_base.qcow2.

Once this is complete, run your base VM. At this point you will need to finish the Fedora setup screens that appear on first boot.

Before you can ssh into this VM, you need to determine its IP address and enable sshd in it. To do this, log in via the display and open a shell. Run the following commands as root:

systemctl enable sshd.service
systemctl start sshd.service

You can determine the IP address by running ifconfig. In the examples that follow, the VM's IP address is 192.168.122.52.
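ifconfig prints a lot of detail. If all you want is the address, the `ip` tool from iproute2 has a compact one-line-per-address mode that is easy to filter. A sketch, assuming `ip` is present in the guest (I would expect it on a stock Fedora install, but treat that as an assumption); the function name `guest_ipv4` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print the guest's non-loopback IPv4 address(es).
# Reads the output of `ip -4 -o addr show` on stdin.
set -euo pipefail

guest_ipv4() {
    # In the -o format: $2 = interface name, $3 = "inet", $4 = address/prefix.
    awk '$3 == "inet" && $2 != "lo" { split($4, parts, "/"); print parts[1] }'
}
```

Inside the VM, run `ip -4 -o addr show | guest_ipv4`.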

ssh into the VM and install commonly used software with the following commands:

ssh root@192.168.122.52
yum update -y
yum install -y python-netaddr git python-devel python-virtualenv telnet
yum groupinstall -y 'Development Tools'
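Typing those commands interactively is fine once, but if you rebuild the base VM you can push them through a single ssh session instead. A sketch; `provision_base_vm` is a hypothetical name, and it assumes root can log in over ssh, as in the session above.

```shell
#!/usr/bin/env bash
# Sketch: provision a freshly installed base VM in one ssh session.
#   $1 = the VM's IP address (for example 192.168.122.52)
set -euo pipefail

provision_base_vm() {
    local ip=$1
    # The quoted 'EOF' keeps the local shell from expanding anything;
    # the remote bash reads the script from stdin and stops at the first error.
    ssh "root@${ip}" 'bash -s' <<'EOF'
set -e
yum update -y
yum install -y python-netaddr git python-devel python-virtualenv telnet
yum groupinstall -y 'Development Tools'
EOF
}
```

For example: `provision_base_vm 192.168.122.52`.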

You now have a usable base VM. Shut it down.

Create the Child VM

The VM we created above will serve as a solid clean base upon which you can create disposable devstack VMs quickly. In this way you will know that your environment is always clean.

Create the child VM from the base:

qemu-img create -b devstack_base.qcow2 -f qcow2 devstack.qcow2
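The command above lets qemu-img probe the backing file's format. A slightly more explicit sketch states it with `-F` (recent QEMU releases refuse to create the overlay without it, as far as I know) and then prints the backing chain so you can confirm the child points at the base. The function name `create_child_image` is hypothetical. Keep in mind that the base image must not be modified (or booted) once children exist, because each child only stores its differences from the base.

```shell
#!/usr/bin/env bash
# Sketch: create a disposable qcow2 overlay on top of the base image.
#   $1 = base image, $2 = new child image
set -euo pipefail

create_child_image() {
    local base=$1 child=$2
    # -F names the backing file's format instead of letting qemu-img guess.
    # A relative base path is resolved relative to the child's directory.
    qemu-img create -f qcow2 -b "$base" -F qcow2 "$child"
    # Show the child and the base image it reads unchanged data from.
    qemu-img info --backing-chain "$child"
}
```

For example: `create_child_image devstack_base.qcow2 devstack.qcow2`.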

Again, import this child into virt-manager, and configure it with at least 2GB of RAM. When you get to the final screen, you have to take additional steps to make sure that virt-manager knows this is a qcow2 image. Make sure that the Customize configuration before install option is selected (as shown below) and then click Finish.

In the next window, find Disk 1 on the left-hand side and click it. Then, on the right-hand side, find Storage format and make sure that qcow2 is selected. An example screen is below:

Now click Begin Installation, and your child VM will boot up. Just as you did with the base VM, determine its IP address and log in from a shell on the host.

Install devstack

Once you have the VM running, ssh into it. devstack cannot be installed as root, so be sure to add a user that has sudo privileges. Then log into that user's account and run the following commands (note that the first two commands work around a problem I have observed with tgtd):

sudo mv /etc/tgt/conf.d/sample.conf /etc/tgt/conf.d/sample.conf.back
sudo service tgtd restart
git clone git://github.com/openstack-dev/devstack.git
cd devstack
./stack.sh
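If you do not have the sudo-capable user mentioned above yet, something along these lines creates one (run it as root inside the VM before the commands above). The user name `stack`, the function name `create_stack_user` and the passwordless-sudo rule are arbitrary choices for this sketch, and it assumes /etc/sudoers includes the /etc/sudoers.d directory, which I believe is Fedora's default.

```shell
#!/usr/bin/env bash
# Sketch: create a sudo-capable user for devstack. Run as root.
#   $1 = user name, $2 = sudoers drop-in directory (default /etc/sudoers.d)
set -euo pipefail

create_stack_user() {
    local user=$1 sudoers_dir=${2:-/etc/sudoers.d}
    local drop_in="${sudoers_dir}/${user}"
    useradd -m "$user"
    # Passwordless sudo so stack.sh does not stop at sudo password prompts.
    echo "${user} ALL=(ALL) NOPASSWD: ALL" > "$drop_in"
    chmod 0440 "$drop_in"
    # Check the syntax; remove the file rather than leave a broken rule behind.
    if ! visudo -cf "$drop_in"; then
        rm -f "$drop_in"
        return 1
    fi
}
```

For example, `create_stack_user stack`, then `su - stack` and continue with the commands above.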

When stack.sh runs, it asks for a bunch of passwords. Just press Enter at each prompt (devstack then generates a random password) and wait (a long time) for the script to finish. When it ends, you should see something like the following:

Horizon is now available at http://192.168.122.127/

Keystone is serving at http://192.168.122.127:5000/v2.0/

Examples on using novaclient command line is in exercise.sh

The default users are: admin and demo

The password: 5b63703f25be4225a725

This is your host ip: 192.168.122.127

stack.sh completed in 172 seconds.
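The password prompts mentioned above can be skipped. As far as I know, stack.sh only prompts for passwords that are not already set in a `localrc` file in the devstack directory (and it saves the ones it generates there), so pre-seeding that file makes the run non-interactive. The variable names below are the ones stack.sh asked about at the time; newer devstack releases read a `local.conf` file with a `[[local|localrc]]` section instead, so treat this as a sketch. `write_localrc` is a hypothetical helper, and one shared password is only sensible for a throwaway development VM.

```shell
#!/usr/bin/env bash
# Sketch: pre-seed devstack's localrc so stack.sh does not prompt.
#   $1 = devstack checkout directory, $2 = password to use everywhere
set -euo pipefail

write_localrc() {
    local devstack_dir=$1 password=$2
    # Overwrites any existing localrc. The file is sourced as shell,
    # so keep the password free of spaces and quotes.
    cat > "${devstack_dir}/localrc" <<EOF
ADMIN_PASSWORD=${password}
MYSQL_PASSWORD=${password}
RABBIT_PASSWORD=${password}
SERVICE_PASSWORD=${password}
SERVICE_TOKEN=${password}
EOF
}
```

For example, `write_localrc ~/devstack devpass` before running `./stack.sh`.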

Hacking With devstack

If the above was painful, do not fret: you never have to do it again. You may choose to create more child VMs, but for the most part you can use your single devstack VM over and over.

Check out devstack

In order to run OpenStack client commands, you first have to set some environment variables. Fortunately, devstack has a very convenient script for this named openrc. You can source it as the admin user or the demo user. Here is an example of setting up an environment for running OpenStack shell commands as the admin user in the admin tenant:

. openrc admin admin

It is that easy! Now let's run a few OpenStack commands to make sure it works:

[jbresnah@localhost devstack]$ . openrc admin admin
[jbresnah@localhost devstack]$ glance image-list
+--------------------------------------+---------------------------------+-------------+------------------+----------+--------+
| ID                                   | Name                            | Disk Format | Container Format | Size     | Status |
+--------------------------------------+---------------------------------+-------------+------------------+----------+--------+
| a3e245c2-c8fa-4885-9b2e-2fc2e5f358a1 | cirros-0.3.1-x86_64-uec         | ami         | ami              | 25165824 | active |
| e6554b2a-cc75-42bf-8278-e3fc3f97501b | cirros-0.3.1-x86_64-uec-kernel  | aki         | aki              | 4955792  | active |
| f2750476-4125-46f1-8339-f94140c40ba3 | cirros-0.3.1-x86_64-uec-ramdisk | ari         | ari              | 3714968  | active |
+--------------------------------------+---------------------------------+-------------+------------------+----------+--------+
[jbresnah@localhost devstack]$ glance image-show cirros-0.3.1-x86_64-uec
+-----------------------+--------------------------------------+
| Property              | Value                                |
+-----------------------+--------------------------------------+
| Property 'kernel_id'  | e6554b2a-cc75-42bf-8278-e3fc3f97501b |
| Property 'ramdisk_id' | f2750476-4125-46f1-8339-f94140c40ba3 |
| checksum              | f8a2eeee2dc65b3d9b6e63678955bd83     |
| container_format      | ami                                  |
| created_at            | 2013-07-26T23:20:18                  |
| deleted               | False                                |
| disk_format           | ami                                  |
| id                    | a3e245c2-c8fa-4885-9b2e-2fc2e5f358a1 |
| is_public             | True                                 |
| min_disk              | 0                                    |
| min_ram               | 0                                    |
| name                  | cirros-0.3.1-x86_64-uec              |
| owner                 | 6f4bdfaac28349b6b8087f51ff963cd5     |
| protected             | False                                |
| size                  | 25165824                             |
| status                | active                               |
| updated_at            | 2013-07-26T23:20:18                  |
+-----------------------+--------------------------------------+
[jbresnah@localhost devstack]$ glance image-download --file local.img a3e245c2-c8fa-4885-9b2e-2fc2e5f358a1
[jbresnah@localhost devstack]$ ls -l local.img
-rw-rw-r--. 1 jbresnah jbresnah 25165824 Jul 26 19:29 local.img

In the above session we listed all of the images that are registered with Glance, got specific details on one of them, and then downloaded it. At this point you can play with the other OpenStack clients and components as well.
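If a client command complains that it has no credentials, one common cause is running it in a shell where openrc has not been sourced. A quick check, assuming the variable names exported by devstack's openrc at the time (OS_USERNAME, OS_TENANT_NAME, OS_PASSWORD, OS_AUTH_URL; newer releases may use OS_PROJECT_NAME instead). The function name `check_os_env` is hypothetical, and it has to run in the same shell that sourced openrc.

```shell
#!/usr/bin/env bash
# Sketch: confirm that openrc exported the credentials the clients read.
set -euo pipefail

check_os_env() {
    local var missing=0
    for var in OS_USERNAME OS_TENANT_NAME OS_PASSWORD OS_AUTH_URL; do
        # ${!var:-} is bash indirection: the value of the variable named by $var.
        if [ -z "${!var:-}" ]; then
            echo "not set: ${var}" >&2
            missing=1
        fi
    done
    # Non-zero when at least one variable is missing or empty.
    return "$missing"
}
```

For example: `. openrc admin admin && check_os_env && glance image-list`.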

Screen

devstack runs all of the OpenStack components under screen. You can attach to the screen session by running:

screen -r

You should now see something like the following:

Notice all of the entries on the screen toolbar at the bottom. Each one of these is a session running an OpenStack service, and its output is that service's log. To cycle through them, press <ctrl+a> and then <space>.

Making a Code Change

In any given screen session you can press <ctrl+c> to kill a service, and then <up arrow> <enter> to restart it. The current directory is the root of that service's Python source tree, which is a git checkout, so you can make changes in that directory without installing them in any way. Simply kill the service (<ctrl+c>), make your change, and then restart it (<up arrow> <enter>).

In the following screencast you can see me:

- Connect to the devstack screen session

- Toggle over to the glance-api session

- Kill the service

- Alter the configuration

- Make a small code change

- Restart the service

- Verify the change
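Because your edits live directly in the git checkouts that devstack created (under /opt/stack, devstack's default destination directory), it is easy to lose track of which projects you have modified. A small sketch that lists the checkouts with uncommitted or untracked changes; the function name `list_modified_repos` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: list the devstack git checkouts that have local changes.
#   $1 = directory holding the checkouts (default /opt/stack)
set -euo pipefail

list_modified_repos() {
    local dest=${1:-/opt/stack} repo
    for repo in "$dest"/*/; do
        # Skip anything that is not a git working tree (or an unmatched glob).
        [ -d "${repo}.git" ] || continue
        # --porcelain prints one line per modified or untracked file.
        if [ -n "$(cd "$repo" && git status --porcelain)" ]; then
            basename "$repo"
        fi
    done
}
```

For example, run `list_modified_repos`, then `cd /opt/stack/glance && git diff` to review a change before you lose it.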