Long time, no see, huh!!! This post has been pending on my part for a while now, partly because I was busy and partly because I am that lazy. But it’s a fairly important post, as it talks about snapshotting GlusterFS volumes. So what are these snapshots, and why are they so darn important? Let’s find out…

Wikipedia says, ‘a snapshot is the state of a system at a particular point in time’. In filesystems specifically, a snapshot is a ‘backup’ (a read-only copy of the data set, frozen at a point in time). Obviously, it’s not a full backup of the entire dataset, but it’s a backup nonetheless, which makes it pretty important. Now, moving on to GlusterFS snapshots. GlusterFS snapshots are point-in-time, read-only, crash-consistent copies of GlusterFS volumes. They are also online snapshots, so the volume and its data remain available to clients while the snapshot is being taken.

GlusterFS snapshots are based on thinly provisioned LVM snapshots, and hence they have certain pre-requisites. A quick look at the product documentation tells us what those pre-requisites are. For a GlusterFS volume to support snapshots, it needs to meet the following pre-requisites:

- Each brick of the GlusterFS volume should be on an independent, thinly provisioned LVM.

- A brick’s LVM should not contain any data other than the brick’s own.

- None of the bricks should be on a thick (non-thinly provisioned) LVM.

- The GlusterFS version should be 3.6 or above (duh!!).

- The volume should be started.

- All brick processes must be up and running.
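To make the rules above concrete, here is a minimal sketch of a pre-requisite checker. It assumes a hypothetical in-memory description of the volume: the `Brick` and `Volume` classes, their field names, and `snapshot_prereq_violations` are made up for illustration. In practice this information would come from tools such as `gluster volume status` and `lvs`, and the real checks are done by GlusterFS itself.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Brick:
    path: str                  # e.g. "server1:/bricks/brick1"
    lv: str                    # hypothetical fully-qualified LV id, e.g. "server1:vg1/brick1_lv"
    thin: bool                 # is the LV thinly provisioned?
    lv_only_this_brick: bool   # does the LV hold nothing but this brick's data?
    process_up: bool           # is the brick process running?


@dataclass
class Volume:
    name: str
    gluster_version: Tuple[int, int]  # (major, minor)
    started: bool
    bricks: List[Brick] = field(default_factory=list)


def snapshot_prereq_violations(vol: Volume) -> List[str]:
    """Return reasons why `vol` cannot be snapshotted (an empty list means none found)."""
    problems: List[str] = []
    if vol.gluster_version < (3, 6):
        problems.append("GlusterFS version is older than 3.6")
    if not vol.started:
        problems.append(f"volume {vol.name} is not started")
    seen_lvs = set()
    for b in vol.bricks:
        if not b.thin:
            problems.append(f"{b.path} is on a thick LV")
        if b.lv in seen_lvs:  # each brick needs its own, independent LV
            problems.append(f"{b.path} shares {b.lv} with another brick")
        seen_lvs.add(b.lv)
        if not b.lv_only_this_brick:
            problems.append(f"{b.lv} holds data other than {b.path}")
        if not b.process_up:
            problems.append(f"brick process for {b.path} is not running")
    return problems


test_vol = Volume("test_vol", (3, 6), True, [
    Brick("server1:/bricks/brick1", "server1:vg1/brick1_lv", True, True, True),
    Brick("server2:/bricks/brick2", "server2:vg1/brick2_lv", True, True, True),
])
print(snapshot_prereq_violations(test_vol))  # []
```

Returning a list of every violation, rather than stopping at the first one, lets an admin fix all the problems in one pass.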

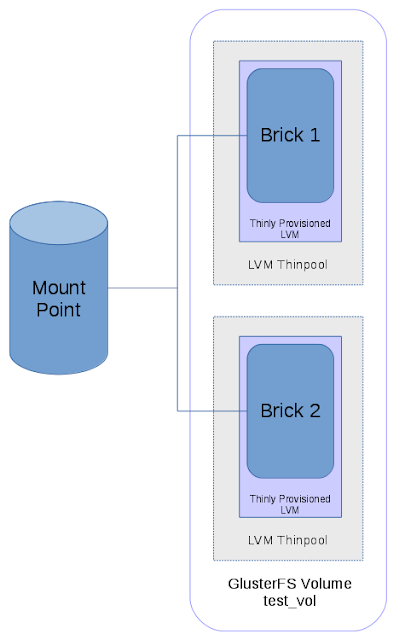

Now that I have laid out the rules above, let me give you their origin story as well. That is, how GlusterFS snapshots internally let you take a crash-consistent backup in a space-efficient manner using thinly provisioned LVM. We start by having a look at a GlusterFS volume whose bricks are on independent, thinly provisioned LVMs.

In the above diagram, we can see that the GlusterFS volume test_vol comprises two bricks, Brick1 and Brick2. Each brick is mounted on its own independent, thinly provisioned LVM. When the volume is mounted, the client process maintains a connection to both bricks. That is as much of a summary of GlusterFS volumes as this post needs. Internally, a GlusterFS snapshot is also a GlusterFS volume, except that it is read-only and is treated differently from a regular volume in certain respects.

When we take a snapshot (say snap1) of the GlusterFS volume test_vol, the following things happen in the background:

- We check that the volume is in the started state and, if so, that all its brick processes are up and running.

- Next, we barrier certain fops (file operations) to make the snapshot crash-consistent. This means that even though it is an online snapshot, certain write fops are barriered for the duration of the snapshot. Fops that are in flight when the barrier is initiated are allowed to complete, but their acknowledgement to the client is held until snapshot creation completes. The barrier has a default timeout of 2 minutes; if the snapshot is not complete within that window, the fops are unbarriered and that particular snapshot fails.

- After successfully barriering fops on all brick processes, we take an individual copy-on-write LVM snapshot of each brick. A copy-on-write LVM snapshot is a fast, space-efficient backup of the data currently on the brick, because that data is not copied up front. These LVM snapshots reside in the same LVM thinpool as the GlusterFS brick LVMs.

- Once these LVM snapshots are taken, we carve bricks out of them and create a snapshot volume from those bricks.

- Once the snapshot creation is complete, we unbarrier the GlusterFS volume.
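The ordering of these steps can be sketched as a small orchestration function. Everything here is hypothetical: `create_snapshot`, `Brick`, `SnapVolume`, `SnapshotFailed` and the `take_lv_snapshot` callback are illustrative names, not GlusterFS internals. The barrier is modelled only as a per-brick flag plus a deadline using the 2-minute default mentioned above, and the deadline is checked between steps rather than enforced preemptively.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List

BARRIER_TIMEOUT_SECS = 120.0  # default barrier window: 2 minutes


class SnapshotFailed(Exception):
    """Raised when any step of snapshot creation fails."""


@dataclass
class Brick:
    name: str                # e.g. "Brick1"
    lv: str                  # hypothetical thin LV backing the brick, e.g. "vg1/brick1_lv"
    process_up: bool = True  # is the brick process running?
    barriered: bool = False  # are write fops currently barriered on this brick?


@dataclass
class SnapVolume:
    name: str
    bricks: Dict[str, str] = field(default_factory=dict)  # original brick -> LV snapshot it was carved from
    read_only: bool = True


def create_snapshot(
    snap_name: str,
    volume_started: bool,
    bricks: List[Brick],
    take_lv_snapshot: Callable[[str], str],
    clock: Callable[[], float] = time.monotonic,
    timeout: float = BARRIER_TIMEOUT_SECS,
) -> SnapVolume:
    # 1. The volume must be started and every brick process must be up.
    if not volume_started:
        raise SnapshotFailed("volume is not started")
    down = [b.name for b in bricks if not b.process_up]
    if down:
        raise SnapshotFailed(f"brick processes not running: {down}")

    # 2. Barrier write fops on all bricks. (In GlusterFS, in-flight fops complete
    #    but their acks are held; this sketch only records the barrier state.)
    for b in bricks:
        b.barriered = True
    deadline = clock() + timeout
    try:
        # 3. Take one LV snapshot per brick, all inside the barrier window.
        lv_snaps: Dict[str, str] = {}
        for b in bricks:
            lv_snaps[b.name] = take_lv_snapshot(b.lv)
            if clock() > deadline:
                raise SnapshotFailed("barrier timeout expired; snapshot failed")
        # 4. Carve bricks out of the LV snapshots and build the read-only snapshot volume.
        return SnapVolume(snap_name, lv_snaps)
    finally:
        # 5. Unbarrier on success and on failure alike.
        for b in bricks:
            b.barriered = False


bricks = [Brick("Brick1", "vg1/brick1_lv"), Brick("Brick2", "vg2/brick2_lv")]
snap1 = create_snapshot("snap1", True, bricks, take_lv_snapshot=lambda lv: lv + "_snap1")
print(snap1.bricks)  # {'Brick1': 'vg1/brick1_lv_snap1', 'Brick2': 'vg2/brick2_lv_snap1'}
```

Unbarriering inside `finally` captures the key property of the flow: whether the snapshot succeeds or times out, the volume never stays barriered.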

As can be seen in the above diagram, the snapshot creation process has created an LVM snapshot for each brick LVM, and these snapshots lie in the same thinpool as the original LVMs. We then carve bricks (Brick1″ and Brick2″) out of these snapshots and create a snapshot volume called snap1.
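Why is this space-efficient? The snapshot shares data blocks with the original LVM inside the thin pool. The toy model below illustrates the idea; the `ThinPool` and `ThinLV` classes are hypothetical and do not reflect how LVM actually stores thin-pool metadata. Taking a snapshot copies only the logical-to-physical block map, and a data block is duplicated only when a write lands on a block that is still shared.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ThinPool:
    """Toy thin pool: physical blocks plus a reference count per block."""
    data: Dict[int, bytes] = field(default_factory=dict)  # physical block id -> contents
    refs: Dict[int, int] = field(default_factory=dict)    # physical block id -> number of LVs using it
    next_id: int = 0

    def alloc(self, payload: bytes) -> int:
        pid = self.next_id
        self.next_id += 1
        self.data[pid] = payload
        self.refs[pid] = 1
        return pid

    def used_blocks(self) -> int:
        return len(self.data)


@dataclass
class ThinLV:
    """Toy thin LV: a map from logical blocks to physical blocks in a shared pool."""
    pool: ThinPool
    mapping: Dict[int, int] = field(default_factory=dict)  # logical block -> physical block id
    read_only: bool = False

    def write(self, lblock: int, payload: bytes) -> None:
        if self.read_only:
            raise PermissionError("snapshot LV is read-only")
        pid = self.mapping.get(lblock)
        if pid is not None and self.pool.refs[pid] == 1:
            # Block is owned by this LV alone: overwrite it in place.
            self.pool.data[pid] = payload
            return
        if pid is not None:
            # Block is shared with another LV (e.g. a snapshot): stop sharing it;
            # the other LV keeps pointing at the old contents.
            self.pool.refs[pid] -= 1
        self.mapping[lblock] = self.pool.alloc(payload)

    def read(self, lblock: int) -> bytes:
        return self.pool.data[self.mapping[lblock]]

    def snapshot(self) -> "ThinLV":
        # A snapshot copies only the block map; every data block becomes shared.
        for pid in self.mapping.values():
            self.pool.refs[pid] += 1
        return ThinLV(self.pool, dict(self.mapping), read_only=True)


pool = ThinPool()
brick1_lv = ThinLV(pool)
for i in range(4):
    brick1_lv.write(i, b"v1")
snap_lv = brick1_lv.snapshot()
print(pool.used_blocks())                   # 4: the snapshot allocated no data blocks
brick1_lv.write(0, b"v2")
print(pool.used_blocks())                   # 5: only the rewritten block was duplicated
print(brick1_lv.read(0), snap_lv.read(0))  # b'v2' b'v1'
```

This is also why the LVM snapshots must live in the same thinpool as the brick LVMs: sharing blocks is only possible within one pool.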

This snapshot, snap1, is a read-only snapshot volume that can be:

- Restored to the original volume test_vol.

- Mounted as a read-only volume and accessed.

- Cloned to create a writeable snapshot.

- Accessed via User Serviceable Snapshots.

All these functionalities will be discussed in future posts, starting with the command line tools to create, delete and restore GlusterFS snapshots.